HDFS Replication Tuning

Replication Strategy

When you create an HDFS replication job, Cloudera recommends setting the Replication Strategy to Dynamic to improve performance. The Dynamic strategy creates chunks of references to files and directories under the Source Path defined for the replication, and then lets the mappers continually request chunks one by one from a logical queue of these chunks. Chunks can also contain references to files whose replication can be skipped because they are already up to date on the destination cluster. The other option for the Replication Strategy is Static. Static replication distributes file replication tasks among the mappers up front to achieve a uniform distribution based on file sizes, and may not provide optimal performance.

When you use the Dynamic strategy, you can improve HDFS replication performance by configuring how chunks are created and packed with file references. This is particularly important for replication jobs where the number of files is very high (1 million or more) relative to the number of mappers.

- Files per Chunk

When you have 1 million files or more in your source cluster that are to be replicated, Cloudera recommends increasing the chunking defaults so that you have no more than 50 files per chunk. This ensures you do not have significant "long-tail behavior," such as when a small number of mappers executing the replication job are working on large copy tasks, but other mappers have already finished. This is a global configuration that applies to all replications, and is disabled by default.

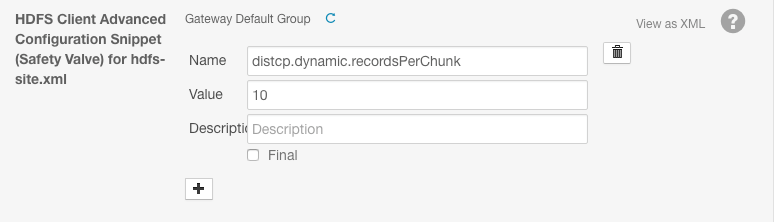

To configure the number of files per chunk:

- Open the Cloudera Manager Admin Console for the destination cluster and go to .

- Search for the HDFS Client Advanced Configuration Snippet (Safety Valve) for hdfs-site.xml property.

- Click to add a new configuration.

- Add the following property:

distcp.dynamic.recordsPerChunk

Cloudera recommends that you start with a value of 10. Set this number so that you have no more than 50 files per chunk.

For example:
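As a minimal sketch, this is how the entry might appear in the snippet's XML view, using the recommended starting value of 10. The property name comes from the step above; the enclosing property, name, and value elements follow the standard Hadoop configuration layout, and the value shown is only a starting point to tune for your own file counts.

```xml
<!-- Sketch only: caps the number of file references packed into each chunk. -->
<!-- The value 10 is the suggested starting point; keep it at 50 or below. -->
<property>
  <name>distcp.dynamic.recordsPerChunk</name>
  <value>10</value>
</property>
```

If your version of the console presents separate name and value fields instead of raw XML, the same name and value apply.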

- Chunk by Size

This option overrides the Files per Chunk option. When Chunk by Size is enabled, any value set for distcp.dynamic.recordsPerChunk is ignored.

The effective amount of work each mapper performs is directly related to the total size of the files it has to copy. You can configure HDFS replications to distribute files into chunks for replication based on the chunk size. This is a global configuration that applies to all replications, and is disabled by default. This option helps improve performance when the sizes of the files you are replicating vary greatly and you expect that most of the files in those chunks have been modified and will need to be copied during the replication.

The Chunk by Size option can induce "long-tail behavior" (where a small number of mappers executing the replication job are working on large copy tasks, but other mappers have already finished) if a significant percentage of your larger files rarely change. For example, some chunks could contain only a single file that is not copied because it did not change, which wastes execution time for the mappers.

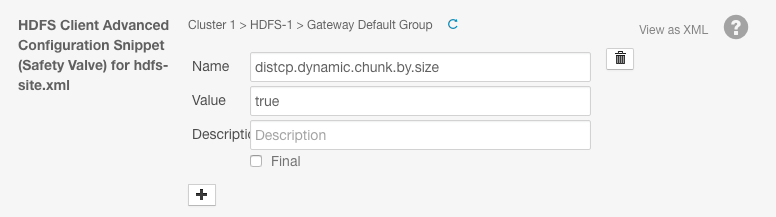

To enable Chunk by Size:

- Open the Cloudera Manager Admin Console for the destination cluster and go to .

- Search for the HDFS Client Advanced Configuration Snippet (Safety Valve) for hdfs-site.xml property.

- Click to add a new configuration.

- Add the following property and set its value to true:

distcp.dynamic.chunk.by.size

For example:
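As a minimal sketch, the entry might look like this in the snippet's XML view. The property name and the value of true come from the step above; the enclosing property, name, and value elements are assumed to follow the standard Hadoop configuration layout.

```xml
<!-- Sketch only: packs chunks by total file size instead of by file count. -->
<!-- When this is true, distcp.dynamic.recordsPerChunk is ignored. -->
<property>
  <name>distcp.dynamic.chunk.by.size</name>
  <value>true</value>
</property>
```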

Replication Hosts

You can limit which hosts can run replication processes by specifying a whitelist of hosts. For example, you may not want a host with the Gateway role to run a replication job, because the process is resource-intensive.

- Open the Cloudera Manager Admin Console.

- Go to .

- Search for the following advanced configuration snippet: HDFS Replication Environment Advanced Configuration Snippet (Safety Valve).

- Specify a comma-separated whitelist of hosts in the following format: HOST_WHITELIST=host1.adomain.com,host2.adomain.com,host3.adomain.com.

- Save the changes.